How AI-Powered Scams Are Quietly Taking Over Australia: What You Need to Know Now

Artificial intelligence has transformed how scams operate across Australia. Where fraudulent messages once stood out due to poor spelling, clumsy formatting or unprofessional design, today’s scams look polished and convincingly real. AI tools can generate emails that mirror official communication, create realistic deepfake voices and build fake websites that closely resemble legitimate platforms.

These advances make scams much harder to recognise. Many Australians now receive messages or calls that feel personalised, referencing real details from their online presence, workplaces or social media activity. Scammers are also using AI to mimic familiar voices or produce videos that appear to show trusted public figures promoting financial opportunities. The realism of these tactics lowers suspicion and increases the likelihood of victims responding quickly.

The scale of the problem continues to grow. According to Scamwatch, Australians lost more than $2.03 billion to scams in 2024, highlighting how widespread and sophisticated digital fraud has become.

Quick Summary: What Australians Need to Know

AI-powered scams in Australia now use deepfake voices, fake celebrity ads and realistic phishing pages. To protect yourself:

- Verify unexpected contact

- Avoid reacting to urgent instructions

- Enable multi-factor authentication

- Report suspicious activity to Scamwatch

Why AI Is Changing How Scams Work

Artificial intelligence has transformed the structure of scam attempts. Instead of relying on poorly written messages, scammers now use AI to craft communication that closely mirrors banks, government agencies and well-known brands. This removes the traditional red flags that once helped people identify suspicious content.

Voice cloning has become particularly concerning. With only a short recording, scammers can replicate a person’s voice with surprising accuracy. This allows them to create urgent scenarios that sound legitimate, such as a colleague asking for a quick transfer or a relative claiming they need immediate help. These situations pressure victims to act before they verify the message.

AI also allows scammers to personalise messages at scale. Information gathered from social media, public profiles or old databases can be woven into emails or messages, making them feel more credible. This level of detail increases trust and reduces a victim’s hesitation.

Fake online identities have also become much easier to construct. AI-generated photos, fabricated credentials and synthetic documents are now commonly used in investment scams, romance scams and fake job advertisements.

All of these factors contribute to a digital environment where fraudulent communication blends smoothly with genuine messages, making vigilance more important than ever.

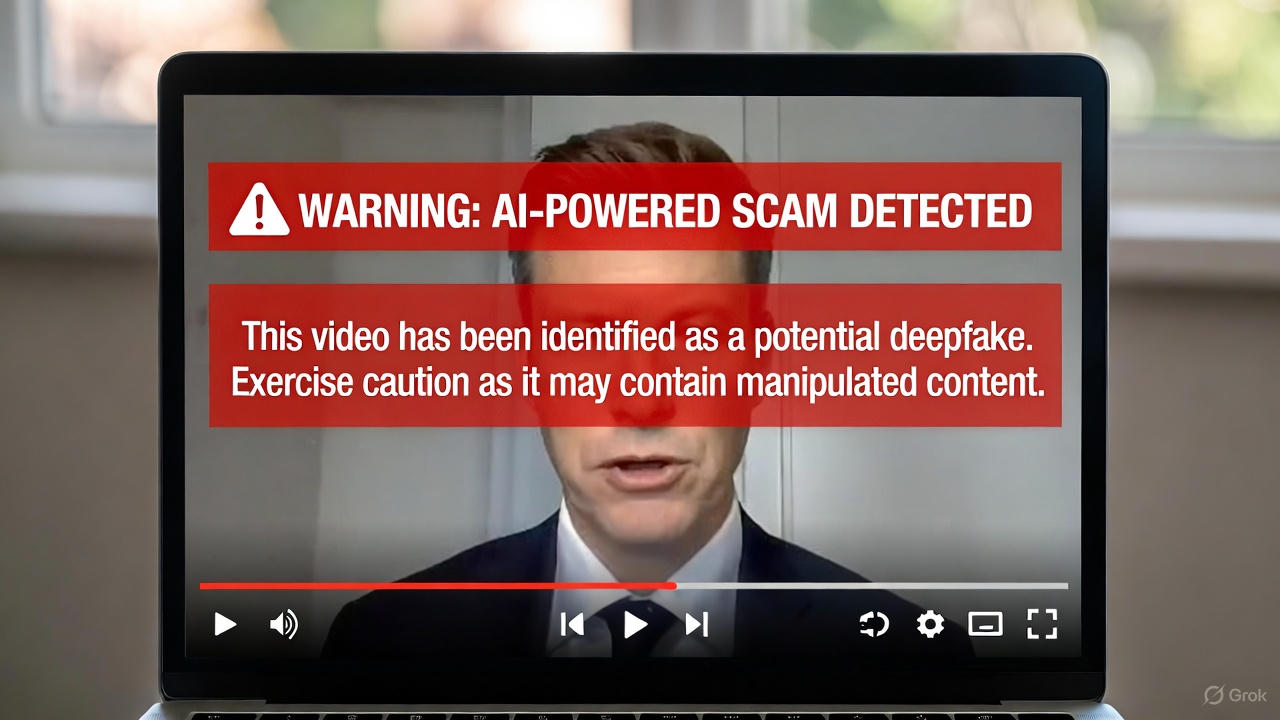

AI-Generated Scam Ads Using Public Figures

One of the fastest-growing scam types in Australia involves AI-generated videos and ads impersonating well-known public figures. These ads appear on platforms such as YouTube, Facebook and Instagram, showing deepfake versions of politicians, business leaders, news presenters or other influencers endorsing investment schemes or financial opportunities.

The videos look authentic and the voices sound real, giving viewers a false sense of trust. Once clicked, these ads typically direct victims to fake investment platforms or websites designed to capture personal and financial information. This scam format has become increasingly convincing due to advances in generative AI tools.

Scamwatch warns that scammers commonly use fabricated celebrity endorsements in online investment schemes and encourages Australians to be cautious of any advertisement claiming guaranteed returns or exclusive access.

Common AI-Powered Scams in Australia

AI-generated phishing remains one of the most widespread threats. These emails and messages are well-formatted, free of errors and often reference legitimate services such as banks, parcel delivery companies or government agencies. The links lead to fake websites that accurately mimic the real ones, tricking people into entering sensitive information.

Deepfake phone calls are also becoming more common. Criminals clone a person’s voice, then create urgent scenarios to prompt immediate action. The emotional pressure makes victims more likely to act before they double-check the call.

Fake investment platforms are another major issue. They often feature AI-generated videos, false dashboards and fabricated testimonials. Because these sites look professional and include persuasive messaging, victims believe they are interacting with real financial services.

Customer service impersonation is also on the rise. Scammers pretend to represent telecommunications companies, energy retailers or the NBN. They deliver scripted messages that imitate real support agents and attempt to collect passwords or gain remote access to devices.

Businesses face renewed risk in the form of fraudulent invoices. AI allows scammers to match company writing styles, email formats and branding elements, making it difficult for staff to distinguish genuine payment requests from fraudulent ones.

Who Is Most at Risk in Australia?

AI-powered scams can impact anyone, but certain groups face higher exposure. Older Australians are frequently targeted because they may be less familiar with deepfake technology and the evolving nature of online threats. This makes it easier for scammers to exploit trust and urgency.

Individuals who spend a lot of time online are also at greater risk simply because they interact with more digital channels. The more information available publicly, the easier it becomes for criminals to tailor their scams.

Small businesses are particularly vulnerable as they often operate with limited resources and rely heavily on email communication. Without strict verification processes, fraudulent invoices and impersonation attempts can slip through unnoticed.

Larger organisations face more structured attacks. Scammers research senior staff members, communication styles and business routines. With this information, they create messages that appear authentic, increasing the chance of financial loss or a data breach.

How to Protect Yourself and Your Business

Australians can significantly reduce their exposure to AI-powered scams by adopting practical safety habits. Awareness is the first step, followed by consistent verification and secure digital behaviour.

Key Protection Steps:

- Always verify unexpected communication using a trusted phone number

- Enable multi-factor authentication on important accounts

- Check website addresses carefully before entering details

- Question urgent or emotional requests, even if the message sounds familiar

- Keep devices and apps updated to reduce vulnerabilities
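
The "check website addresses" step can be illustrated with a short Python sketch. It is only an illustration under stated assumptions: the function name `is_trusted_url` and the `TRUSTED_DOMAINS` allowlist are hypothetical, and the allowlist should contain only domains you have verified yourself. The idea is to compare the actual hostname in a link, not the text that merely looks familiar, against that list.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist for illustration; use domains you have verified yourself.
TRUSTED_DOMAINS = {"scamwatch.gov.au", "cyber.gov.au"}


def is_trusted_url(url: str, trusted_domains: set[str] = TRUSTED_DOMAINS) -> bool:
    """Return True only for https URLs whose host is a trusted domain
    or a subdomain of one."""
    parts = urlsplit(url)
    # hostname drops any "user@" prefix and port, which scammers use as decoys
    host = (parts.hostname or "").lower().rstrip(".")
    if parts.scheme != "https" or not host:
        return False
    return any(host == d or host.endswith("." + d) for d in trusted_domains)


if __name__ == "__main__":
    for link in (
        "https://www.scamwatch.gov.au/report-a-scam",
        "https://scamwatch.gov.au.example.com/login",  # trusted name used as a subdomain
        "https://scamwatch-gov.au/",                   # lookalike with a hyphen
    ):
        print(link, is_trusted_url(link))
```

A check like this only catches links that point somewhere unexpected. It does not prove a page is safe, so typing the address of a known site directly remains the more reliable habit.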

Businesses should review internal processes to ensure payments are verified through more than one channel. Training staff to recognise suspicious requests, especially those involving financial details, can prevent costly mistakes. Families should also discuss emerging scam types to ensure all members understand how AI impersonation works.
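
For businesses, the "more than one channel" rule can be written down as a simple check. The Python sketch below is a hypothetical model, not a description of any real payment system: the `PaymentRequest` class, its fields and the `can_release` function are invented for illustration. It assumes a payment may be released only after it has been confirmed through at least one channel other than the one the request arrived on.

```python
from dataclasses import dataclass, field


@dataclass
class PaymentRequest:
    """Hypothetical record of a payment request and how it was confirmed."""
    payee: str
    amount: float
    received_via: str  # channel the request arrived on, e.g. "email"
    confirmed_channels: set[str] = field(default_factory=set)

    def confirm(self, channel: str) -> None:
        """Record a confirmation, e.g. a call to a phone number already on file."""
        self.confirmed_channels.add(channel.strip().lower())


def can_release(request: PaymentRequest) -> bool:
    """Allow release only if a channel other than the original one confirmed it."""
    origin = request.received_via.strip().lower()
    independent = request.confirmed_channels - {origin}
    return len(independent) >= 1


if __name__ == "__main__":
    invoice = PaymentRequest("Example Supplier Pty Ltd", 12500.0, received_via="email")
    invoice.confirm("email")        # replying to the same email thread does not count
    print(can_release(invoice))
    invoice.confirm("phone")        # call back on a number already held on file
    print(can_release(invoice))
```

The design choice is that a reply on the original channel never counts as verification, because a scammer who controls that channel can also answer the follow-up question.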

For authoritative guidance on protecting yourself from scams, the Australian Cyber Security Centre provides practical advice on identifying and responding to suspicious activity.

The Road Ahead for Australia

AI-powered scams will continue to evolve as technology progresses. Deepfake video calls, AI-generated identity documents and hyper-personalised scam messages may become increasingly common. Because scammers adopt new tools quickly, Australians must stay informed and adapt their digital habits.

Staying up to date through trusted sources, verifying requests and building cautious online habits will play a crucial role in reducing risk. The rise of AI-driven scams highlights the importance of awareness as a frontline defence.

Frequently Asked Questions

What is the most common AI-powered scam in Australia?

Phishing remains the most common AI-driven scam because scammers can create emails and websites that closely resemble legitimate organisations. These messages often look polished, personalised and trustworthy, making it harder for people to recognise the danger.

Can scammers really clone voices accurately?

Yes. With only a few seconds of audio, scammers can create a voice clone that sounds very similar to a real person. These calls often involve urgent or emotional requests designed to make victims act quickly before verifying the situation.

Are businesses more at risk than individuals?

Businesses often face higher-value losses due to invoice manipulation, executive impersonation and payment redirection. However, individuals are also heavily targeted through fake investment platforms, deepfake calls and scam ads using public figures.

How do I know if a video of a public figure is a deepfake scam?

Deepfake scam videos may show slightly mismatched lip movements, unnatural expressions or unusual audio timing. If a public figure appears to promote investment schemes, lotteries or financial programs promising high returns, treat the video as suspicious. Legitimate public figures do not advertise such opportunities.

Why are AI-generated scams becoming so successful in Australia?

AI makes it easy for scammers to create messages, videos and phone calls that look and sound authentic. These personalised and professional-looking communications reduce scepticism and encourage quick responses, especially when urgency or fear is used.

What should I do if I clicked on a suspicious link?

If you clicked a suspicious link, close the page immediately and avoid entering any details. Change your passwords, enable multi-factor authentication and watch your accounts for unusual activity. If you believe your information has been compromised, contact your bank straight away and report the incident to Scamwatch.

Are AI-powered scams aimed at specific age groups?

AI-powered scams target every age group. Older Australians are often targeted because scammers assume they may be less familiar with new technologies. Younger people are also at risk because their online activity provides scammers with more data to personalise and tailor attacks.

Can banks or government agencies use AI in their communication?

Some organisations use automated tools, but legitimate banks and government agencies will never request sensitive details through unexpected emails, pop-ups or urgent phone calls. Any communication that pressures you to act instantly should be treated with caution.
